062 #ai #opendebate

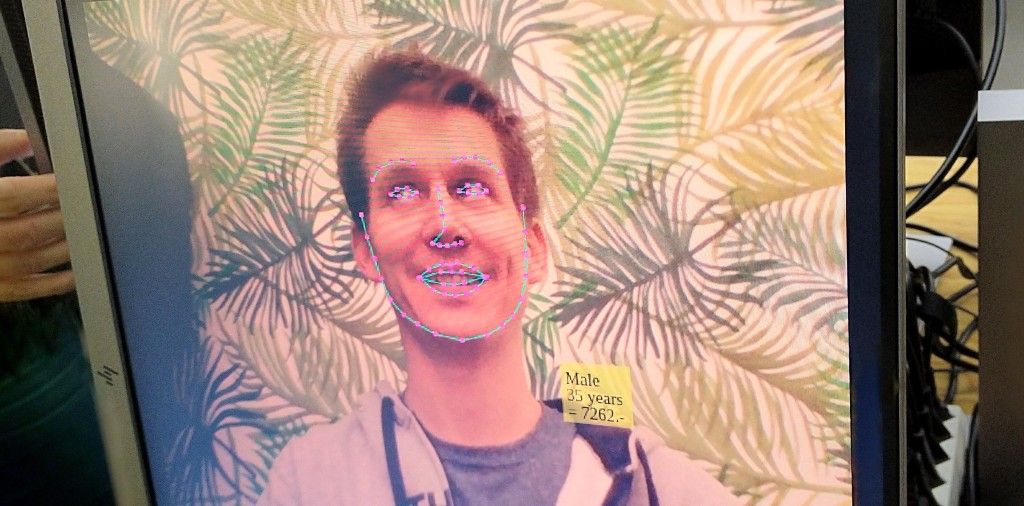

"I am what I earn?..." ironically asks a digital mirror, part of a temporary installation at the Effinger coworking space that aimed to artfully demonstrate a range of applications for Artificial Intelligence. This automatic salary estimator was the most popular part of my performance at an evening event at Effinger organized by the Swiss Institute for Global Affairs, which included two hours of talks and group discussion (streamed on YouTube) and a set of A.I.-powered experiences.

Friday's event warmed me up for the Applied Machine Learning Days, which start in Lausanne this weekend. Here is how the organizers described Friday's event:

A.I. - salvation or curse for mankind?

Our Open Debate leaves simple narratives aside to ask the question: where is AI today, where will it be tomorrow ... Humanity will be changed by artificial intelligence, as will the way we live together in society. Possibly our language, our idea of human intelligence, and the value of being human will change. Ethical and philosophical questions will play an increasingly central role. The socio-technological and economic changes associated with the keyword Industry 4.0 are just as relevant. Cyberanthropology offers answers that have often been ignored in the debates so far. In addition, there are fundamental security policy issues, such as whether AI makes our society safer or whether we lose our resilience as a result. In an interdisciplinary and participatory dialogue, we want to bring together experts from diverse perspectives and interested members of civil society.

(my own translation from the original German)

During the talks, I wore a Muse neural headband to measure and statistically analyse my brain's activity levels at intervals. This biological data could be used in many ways, from enhancing my enjoyment of the occasion in real time to augmenting my attention, and perhaps even to give organizers or speakers feedback with the fine temporal granularity of website analytics. The headband provoked discussion about how far we are willing to let technology monitor our experience and interfere with our physical sensations and intelligence: how much, indeed, we are willing to hack our brains and further blur the line between "Artificial" and "Intelligence". For more depth on this subject, see last year's paper Bioinspired Classifier Optimisation for Brain-Machine Interaction by Jordan J. Bird et al.
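
The post doesn't say how the headband data was analysed. As a minimal sketch of what "statistically analysing activity levels at intervals" might look like, the TypeScript below splits a single EEG channel into fixed windows and reports, for each window, the share of 1-40 Hz power that falls in the alpha band (8-13 Hz). The 256 Hz sampling rate, the band limits, and all function names are assumptions, and a naive DFT stands in for the FFT a real pipeline would use.

```typescript
// Hypothetical sketch: relative alpha-band power per window of EEG samples.
// ASSUMPTIONS: one raw channel, sampled at 256 Hz; band limits are conventional
// choices, not values taken from the post or from Muse documentation.
const SAMPLE_RATE_HZ = 256;

// Power at frequency bin k of a real-valued window, via a naive DFT.
function binPower(samples: number[], k: number): number {
  const n = samples.length;
  let re = 0;
  let im = 0;
  for (let t = 0; t < n; t++) {
    const angle = (2 * Math.PI * k * t) / n;
    re += samples[t] * Math.cos(angle);
    im -= samples[t] * Math.sin(angle);
  }
  return (re * re + im * im) / n;
}

// Sum of bin powers whose frequency lies in [lowHz, highHz), positive bins only.
function bandPower(samples: number[], lowHz: number, highHz: number, rate = SAMPLE_RATE_HZ): number {
  const n = samples.length;
  let total = 0;
  for (let k = 1; k < n / 2; k++) {
    const freq = (k * rate) / n;
    if (freq >= lowHz && freq < highHz) total += binPower(samples, k);
  }
  return total;
}

// Split a recording into non-overlapping windows and return, for each one,
// alpha (8-13 Hz) power divided by broadband (1-40 Hz) power.
function relativeAlphaPerWindow(samples: number[], windowSeconds = 1, rate = SAMPLE_RATE_HZ): number[] {
  const windowSize = Math.floor(windowSeconds * rate);
  const result: number[] = [];
  for (let start = 0; start + windowSize <= samples.length; start += windowSize) {
    const w = samples.slice(start, start + windowSize);
    const total = bandPower(w, 1, 40, rate);
    result.push(total === 0 ? 0 : bandPower(w, 8, 13, rate) / total);
  }
  return result;
}
```

Comparing these ratios window by window is one simple way to see whether "activity" shifts over the course of an event; a real analysis would also need artifact rejection (blinks, jaw movement) that this sketch omits.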

My brain is evidently more active by the end of the event. The data doesn't lie 👼 pic.twitter.com/eeyLIcf72A

— 👄 (@sodacpr) January 24, 2020

Another part of my performance explored how A.I. is changing the way we are seen as freelancers or job candidates. It reflected on the question of the "value of being human" and on my own choice to work independently in a coworking space, and commented on the concerning rise of A.I. tools in recruitment, as recently debated in the news.

So I prototyped an A.I. recruiter powered by open data. In the past, I have used SALARIUM from the Federal Statistical Office as a handy tool to prepare for salary negotiations, working out wage expectations based on my location, experience, and a number of other parameters. Useful as it is in negotiation, it unfortunately does not have an API, but the statistical extracts available at opendata.swiss come from the same source.

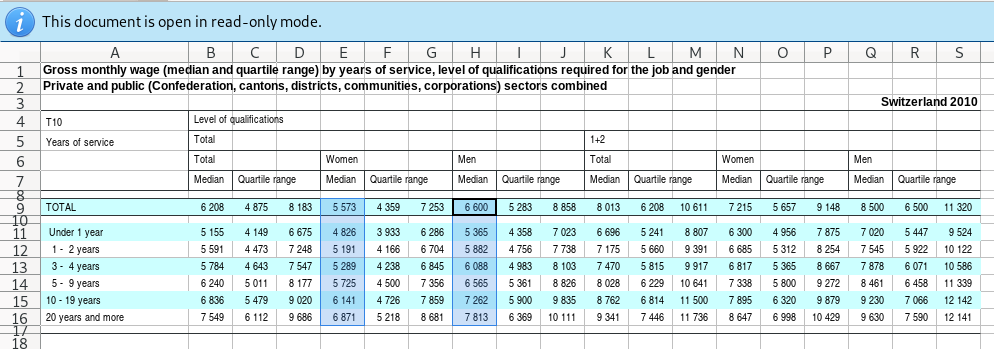

The dataset Monthly salary per region, work group, age and gender would have been perfect, but its age column is split into very large brackets. Instead, I used Gross monthly wage by years of service - Private and public sectors combined - Switzerland, estimating years of service from age under the (grossly generalized) assumption that you start working at 19 and reach your career peak at 45. I then distributed the median values for the gross (brutto) monthly salary across that range.
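
A minimal sketch of the mapping described above, assuming a simple step-wise lookup: an estimated age is clamped to the 19-45 range, converted into years of service, and matched to the median of the bracket it falls into. The bracket boundaries and CHF amounts are placeholders, not the published figures, and every name here is hypothetical; the original code may distribute the medians differently.

```typescript
// Hypothetical age -> years of service -> median wage lookup.
// PLACEHOLDER brackets: replace with the medians from the opendata.swiss extract.
interface ServiceBracket {
  minYears: number;  // lower bound of the years-of-service bracket
  medianChf: number; // median gross monthly wage for that bracket (placeholder)
}

const BRACKETS: ServiceBracket[] = [
  { minYears: 0, medianChf: 1000 },
  { minYears: 5, medianChf: 2000 },
  { minYears: 10, medianChf: 3000 },
  { minYears: 20, medianChf: 4000 },
];

const CAREER_START_AGE = 19; // assumption stated in the post
const CAREER_PEAK_AGE = 45;  // assumption stated in the post

// Years of service implied by an (estimated) age, clamped to the 19-45 range.
function yearsOfService(age: number): number {
  const clamped = Math.min(Math.max(age, CAREER_START_AGE), CAREER_PEAK_AGE);
  return clamped - CAREER_START_AGE;
}

// Median of the highest bracket whose lower bound has been reached.
// Brackets must be sorted by ascending minYears.
function estimateMonthlyWage(age: number, brackets: ServiceBracket[] = BRACKETS): number {
  const years = yearsOfService(age);
  let wage = brackets[0].medianChf;
  for (const b of brackets) {
    if (years >= b.minYears) wage = b.medianChf;
  }
  return wage;
}
```

Clamping at 45 encodes the "career peak" assumption: anyone who looks older than 45 gets the top bracket rather than an extrapolated value.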

Although I first intended to use a Python-based convolutional neural network with OpenCV and a Raspberry Pi, as we did in the Portraid Id project, I experimented with the browser-based TensorFlow.js and found that it performed extremely well in real time on my laptop. An open source API for face detection and recognition, face-api.js by Berlin-based open source hacker Vincent Mühler, made it easy to get everything up and running.
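
face-api.js can attach an estimated age to each detected face (for example through its `withAgeAndGender()` task). Frame-by-frame estimates from a webcam can fluctuate, so a live mirror might smooth them before turning age into a salary. The hypothetical helper below does this with an exponential moving average; its name, the `alpha` default, and the choice of an EMA are assumptions, not part of face-api.js or of the original installation.

```typescript
// Hypothetical helper: smooth noisy per-frame age estimates with an
// exponential moving average. Not part of face-api.js.
interface AgeEstimate {
  age: number; // mirrors the `age` field face-api.js attaches to age/gender results
}

class AgeSmoother {
  private readonly alpha: number; // weight of the newest frame, in (0, 1]
  private value: number | null = null;

  constructor(alpha = 0.2) { // 0.2 is an arbitrary default
    if (!(alpha > 0 && alpha <= 1)) throw new RangeError("alpha must be in (0, 1]");
    this.alpha = alpha;
  }

  // Feed one frame's detection (or null when no face is found); returns the smoothed age.
  update(detection: AgeEstimate | null): number | null {
    if (detection === null) return this.value; // keep the last value while no face is visible
    this.value = this.value === null
      ? detection.age
      : this.alpha * detection.age + (1 - this.alpha) * this.value;
    return this.value;
  }

  // Forget the current estimate, e.g. when a new person steps in front of the mirror.
  reset(): void {
    this.value = null;
  }
}
```

A render loop would call `update` once per video frame; a smaller `alpha` gives a steadier display at the cost of reacting more slowly to a new face.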

Use open source #FaceRecognition by @justadudewhohax and data from @opendataswiss to anticipate your monthly salary, addressing basic questions of job security in #HelloCoworker pic.twitter.com/IeI7mCLBnr

— 👄 (@sodacpr) January 24, 2020

Although it is unlikely ever to be used in a real job hunt, the result entertained and provoked dozens of people. Most seemed pleased with the detection, and the auto-salary led to some animated discussion about the existential questions that confront us coworkers and careerists. That, and plenty of derisive remarks and general amusement. My modifications are open sourced here for anyone who wants to build on this, or at least to tweak that winning eye-smile ^-^

The other projects on display:

ART SQOOL, an art school simulation and drawing program by Julian Glander, a 3D artist based in Brooklyn, USA. With an art-trained A.I. as your faculty advisor, your work is objectively graded using high-tech capabilities. This program represents a new frontier for education and for creative crafts, which we traditionally associate with skills that lie safely in the domain of human competence.

RACE TO A.I. (source). In this game by Derek Shiller, a web developer from New York, USA, you are the owner of a startup in the A.I. space. You are competing with other companies and organizations to be the first to create strong artificial intelligence. If you are the first, your technological dominance will allow you to secure your vision of the good society for centuries! If you are not, your children will live in the world created by someone else.

ELON DANCES TO OPENPOSE. The video clip "Elon Musk pulls off dance moves at Tesla's Shanghai plant" by New China TV was fed into OpenPose, an open source "Real-time multi-person keypoint detection library for body, face, hands, and foot estimation". OpenPose uses machine learning to analyse the video and overlay a skeleton-like rendering of the movements. These movements are biometric information, unique to the individual. They have been extracted, aggregated, and released into the public domain to encourage future generations of A.I.-enhanced dancers.
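
OpenPose can write its detections as one JSON file per frame (via its `--write_json` option), where each entry in `people` holds a flat `pose_keypoints_2d` array of x, y, confidence triples. As a hedged sketch of the "extracted, aggregated" step, the TypeScript below parses such frames and collects each keypoint's trajectory for the first detected person. The function names and the confidence threshold are hypothetical, and the exact JSON fields may differ between OpenPose versions.

```typescript
// Hypothetical parser for OpenPose per-frame JSON output.
// ASSUMPTION: each person carries a flat pose_keypoints_2d array of
// [x, y, confidence] triples; undetected joints typically have confidence 0.
interface OpenPoseFrame {
  people: { pose_keypoints_2d: number[] }[];
}

interface Keypoint {
  index: number; // keypoint id within the pose model (e.g. BODY_25)
  x: number;
  y: number;
  confidence: number;
}

// Parse one frame into a list of keypoints per person, dropping low-confidence ones.
function parseFrame(json: string, minConfidence = 0.1): Keypoint[][] {
  const frame = JSON.parse(json) as OpenPoseFrame;
  return frame.people.map((person) => {
    const flat = person.pose_keypoints_2d;
    const points: Keypoint[] = [];
    for (let i = 0; i + 2 < flat.length; i += 3) {
      const confidence = flat[i + 2];
      if (confidence >= minConfidence) {
        points.push({ index: i / 3, x: flat[i], y: flat[i + 1], confidence });
      }
    }
    return points;
  });
}

// Aggregate the first person's keypoints across frames into per-joint trajectories.
function trajectories(frames: string[]): Map<number, [number, number][]> {
  const tracks = new Map<number, [number, number][]>();
  for (const json of frames) {
    const person = parseFrame(json)[0];
    if (!person) continue; // nobody detected in this frame
    for (const p of person) {
      const track = tracks.get(p.index) ?? [];
      track.push([p.x, p.y]);
      tracks.set(p.index, track);
    }
  }
  return tracks;
}
```

Tracking only the first person keeps the sketch short; a clip with several dancers would need some way to match people across frames, which OpenPose's per-frame output does not do by default.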

My thanks go to Urs Vögeli and the rest of the Global Affairs team, to the panelists and everyone who came out to listen, talk and test A.I. You can find more links and images on a Twitter thread.

«Data is not neutral: it's always contextual, categorically designed, a cultural program.» Still, life's short, and we all want to have fun: so spend 5 minutes and a few brainwaves on this A.I. exhibit, where you can ... pic.twitter.com/eR8QMERiaI

— 👄 (@sodacpr) January 24, 2020

The works on this blog are licensed under a Creative Commons Attribution 4.0 International License
