101 #postaldata

Put me in the shoes of someone being told to re-skill or up-skill. Go to a job fair, sign up to e-learning, apply to an accelerator to bootstrap your entrepreneurial drive. No time to live the life of a technological snail, just sprout wings, aim for the moon and tech up!

For most of my life I have felt like a Helicidae when it comes to the big races, pushing out any thoughts of running in some kind of marathon. The idea of stumbling around in a crowd of people, being tagged, tracked and measured, falling desperately behind... Yikes! I can relate these feelings of inadequacy to those of anyone struggling to sign up for a Hackathon. There is a happy end to my story at the bottom of this post, but I am here to talk about another kind of runner.

As I jog along the forest path, I wonder if this is something like what many workers of delivery services are feeling right now, with post office duties and other jobs being transformed in the direction of digitalization, automation, and robotics. Among others, these are the official plans of the Swiss Post, announcing last week the cutting of 1/5th of capacity by 2028 (swissinfo 31.5.2024). We may hope that such decisions get weighed against the needs of the social contract, the service public, backed up by meticulous data and transparent facts.

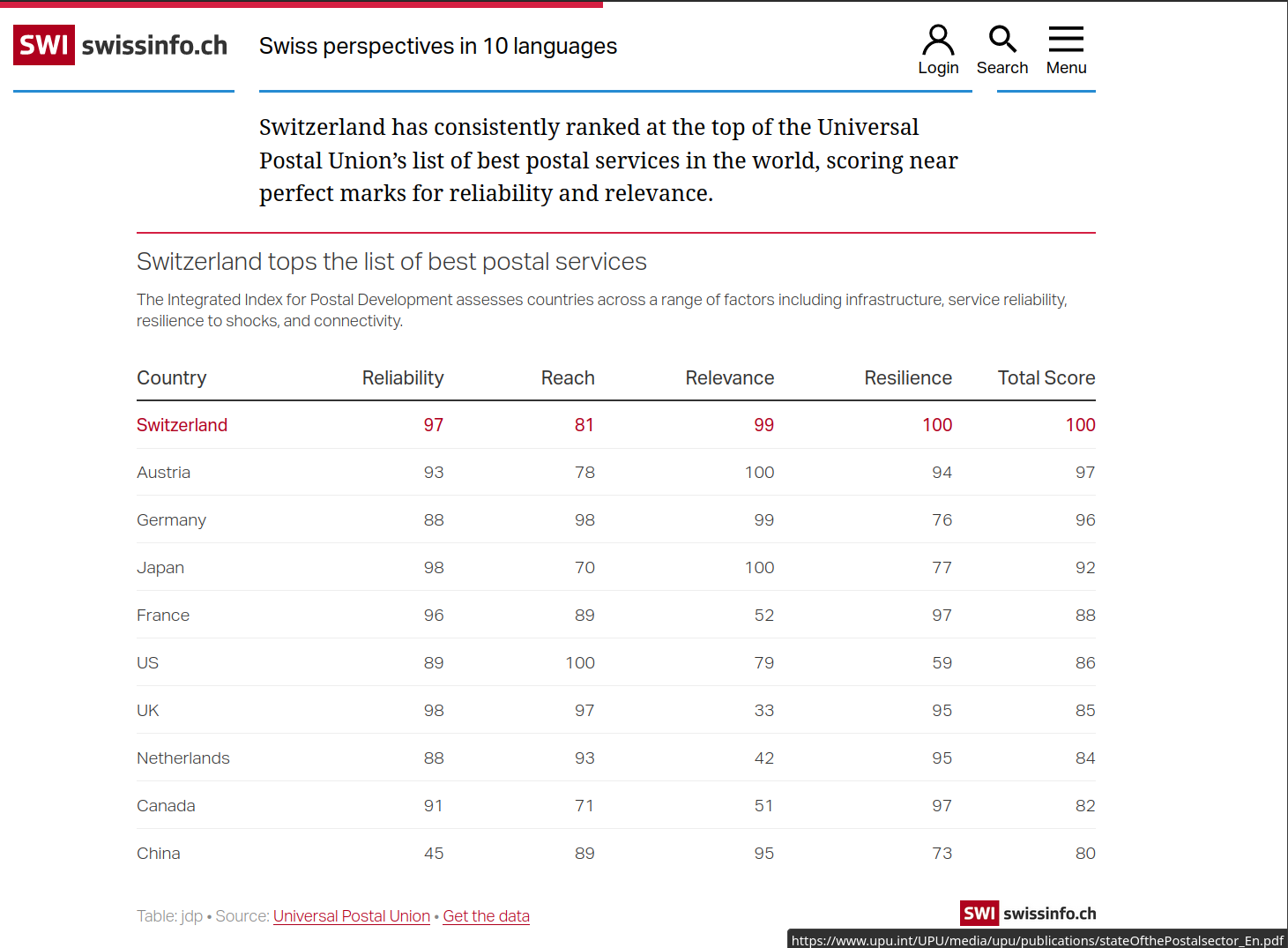

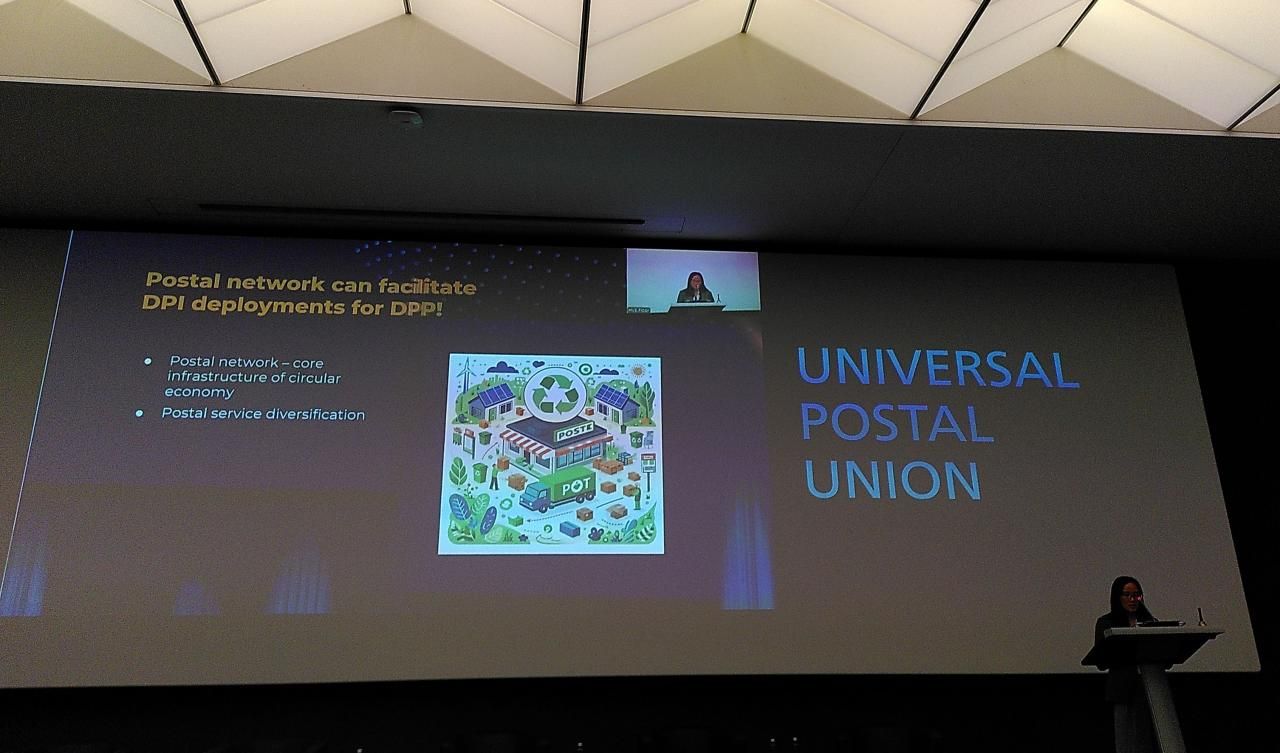

When it comes to keeping track of the global situation and setting standards from Reliability to Resilience, I know of no better institution in the world than the Universal Postal Union (UPU), or as we call it locally, the Weltpostverein. Last year I was one of the organizers and mentors of the first UPU Postal Hackathon. Talking to reps from the international and national organizations, I realized there that my understanding of the work of the post barely scratched the surface of the field.

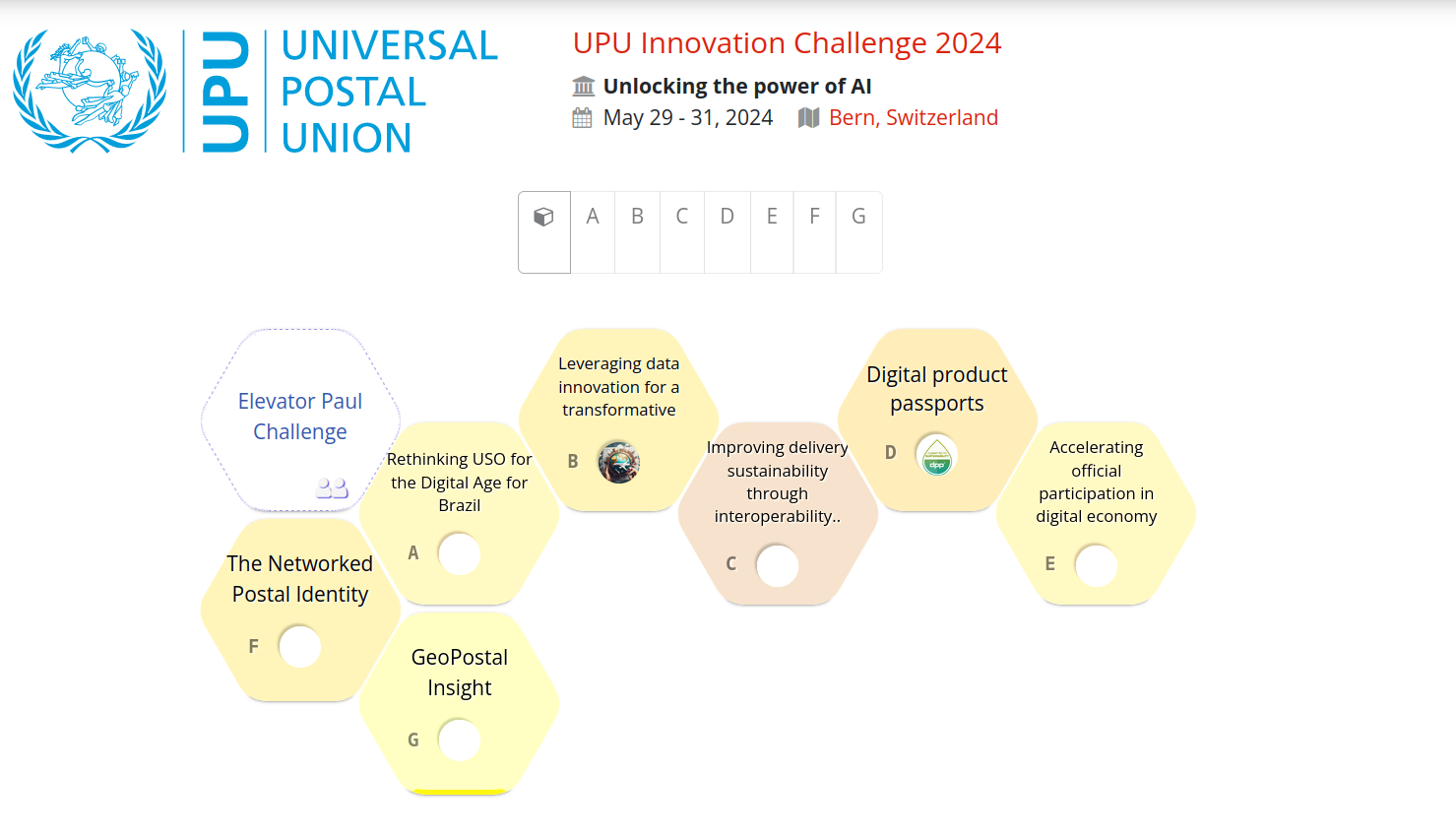

This year, I wanted to step up and participate more directly, partly out of a desire to take the time to dig into some data, and to see for myself what the community is challenged and fascinated by. So I applied to join as an individual contributor to this year's UPU Innovation Challenge, and was among several hundred candidates who were filtered and matched into teams by Horizon Architecture.

The unique setting of the event and extremely professional community behind it already gained my respect last year, and was confirmed again. It would have been great to have the Opendata.ch team on there, as well as others from the Open Knowledge community. Many of my colleagues there were busy convening at csv,conf,v8 in Mexico (talks soon on YouTube). I always try my best to act as ambassador of the global open data and open source community - and even had some open hardware along, in case we got into a discussion of edge nodes and ground level data collection. But: especially given the backdrop of the 32nd Conference on Postal and Delivery Economics, our focus was squarely on sources of high level economic indicators and regional statistics.

What is, exactly, an Innovation Challenge: is it a hackathon, or something else? We had a brief public discussion of this right at the beginning. There were arguments that in the more academic context of the parallel conference, the word "hackathon" may be a deterrent for those who might otherwise lack the confidence to contribute. I can understand that rationale, though to me the idea of being an "Innovation Challenger" seems no less ambitious. Indeed, it is Open innovation that I see as a precursor and platform to many hackathons. It was made clear to us that the focus is on A.I. capabilities, and that extra support from AWS was arranged to make sure no scarcity of hacking skills would stand in the way of progress.

With the encouragement of the organizers, we again used the Dribdat platform set up by Opendata.ch last year, to bring continuity and Swiss-made timekeeping to the proceedings. The participants were not required to use it, but I hope those that did were able to get efficiency gains from it. A study of the event was being done by the European University Institute, which involved several surveys, interviews, and accessing Dribdat APIs, and it will be interesting to hear their opinion.

In my introduction, I shared a bit of historical context about the word Marathon. It was very spur of the moment and tongue in cheek to suggest an etymological connection to the French expression se marrer. Indeed, a better historical reference is the town of Marathon in Greece, and the story of Pheidippides, the original marathon runner (British Museum). There must be some traditions with running and the postal services, at least as suggested by the Guinness World Records title for the Fastest marathon dressed as a postal worker, whose rules require "full postal uniform of trousers, shirt, tie, jacket & cap & post bag with 10lbs of weight inside" (Quirky Races) 🎽

Back to Bern. We got together on Wednesday evening to find our team, get acquainted, and hear the challenges. It was great to be welcomed by the conference organizers and participants, heads of the hosting organisation, as well as Alec von Graffenried, our current Mayor, whose remarks about Bern as host city of the UPU and other international organisations I took to heart. Later we exchanged some ideas on how to be a good digital host city.

PowerPoint problems hinted already at some of the IT challenges we were going to have to deal with. I missed seeing a completely AI generated challenge, such as the one submitted by Microsoft at the GovTech Hackathon this year. Nailing the kickoff with the right delivery vehicle helps to start the sprint on the right foot.

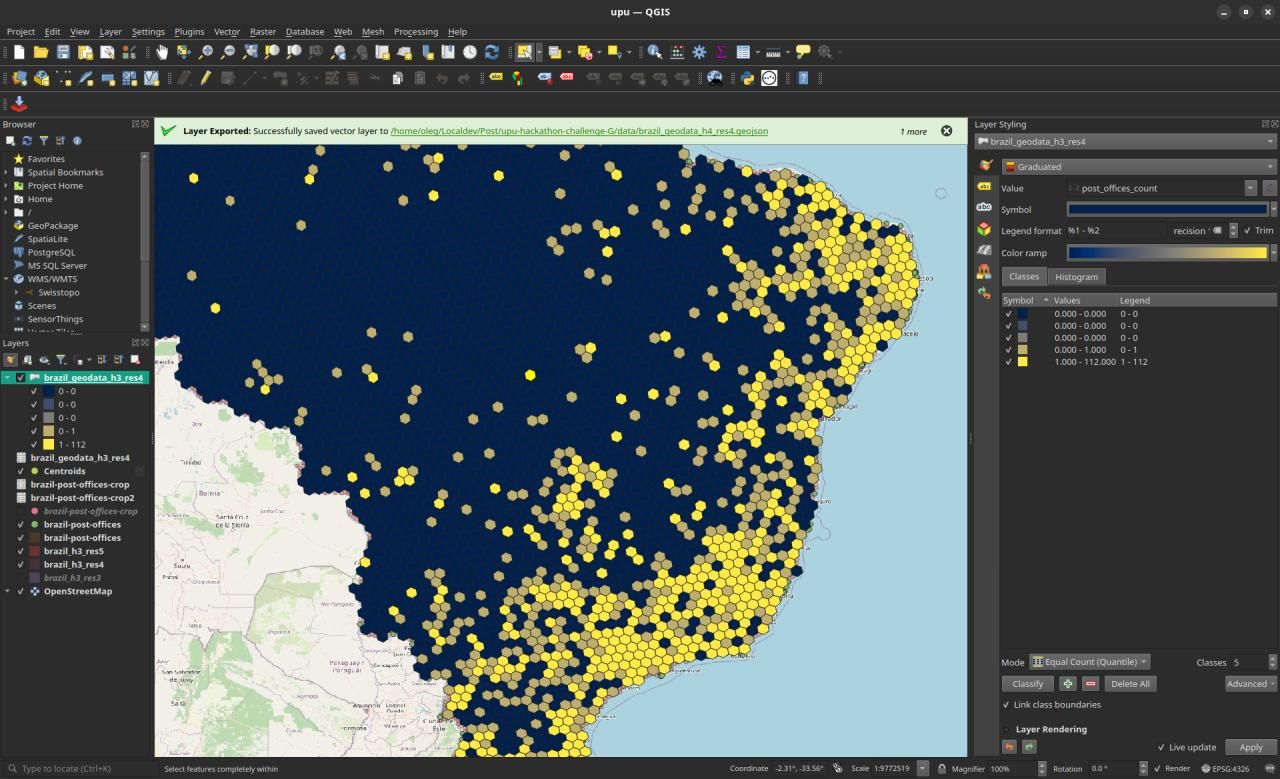

It was dark when I got home, but I felt energized and already involved in the challenge assigned to me and my small team by the host AI. We were still emailing ideas until late into the night, and I was feeling less snail-like already (though I am very sorry to any snails I may have stepped on, as it really was pitch black!). The initial task we set ourselves was to get hold of datasets relevant to analysing regional disparity in postal services and complementary infrastructure. The challenge fit my professional experience with geodata quite well, and I tried to apply myself as GIS technician, OSM contributor, and data engineer.

The next day I had a lovely birthday breakfast with my loving family, who have been supporting countless hackathons over the years, and know all too well that during these times my body is in one place, and most of my mind is in another. This was followed by a strong cup of very Italian coffee with friends and peers in the CH Open network in Bern. We talked briefly about the Parldigi meeting the previous day, which several of them attended, and they relayed to me remarks of the Swiss Post that helped me to reflect on the cooperation and frictions between the postal and civic tech communities.

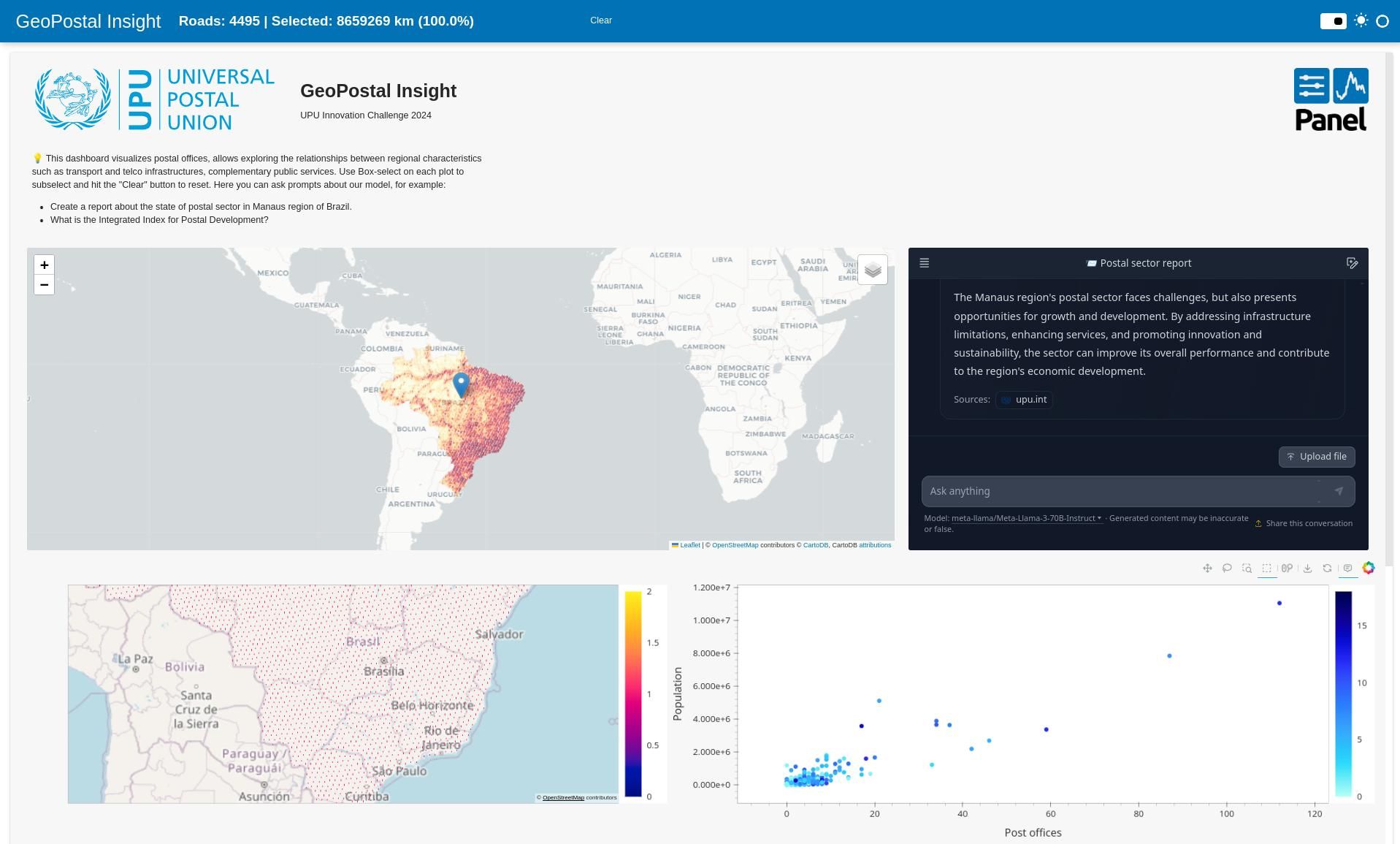

It is day two, the team is assembled and boosted by an IT Solutions Architect from Amazon (thanks Jorge), who helped us get access to practically unlimited cloud resources. Working with a data engineer from the Brazilian post (olá Rafael) and an analyst from the UPU, I quickly sketch and then wireframe an app that should help them in the problem domain. We then turn to AI for help, and explore both data aggregation and UI generation through prompts.

An absolutely delicious lunch follows, during which I engage with the UPU and ITU staff, chatting in French, Spanish and English, and get exposed to some of the exciting opportunities their job entails (global impact, vast technical access & learning possibilities), as well as the challenges (administrative and legal complexity, sometimes frustratingly slow pace, the precarious state of world security). I am getting along fabulously with my team members at this point, who are just a delight to sit with. My only regret is that due to the torrential rain storm outside, we can't use the rooftop terrace that we so much enjoyed last year.

Speaking of jobs, the ITU is hiring – as are the Swiss Post, La Poste, Österreichische Post, PostNL, Japan Post, Canada Post... I could not find a recruitment portal from Correios in Brazil, but there is interesting staffing data online. Get your 💼 and get to work with the 📦📦📦 experts.

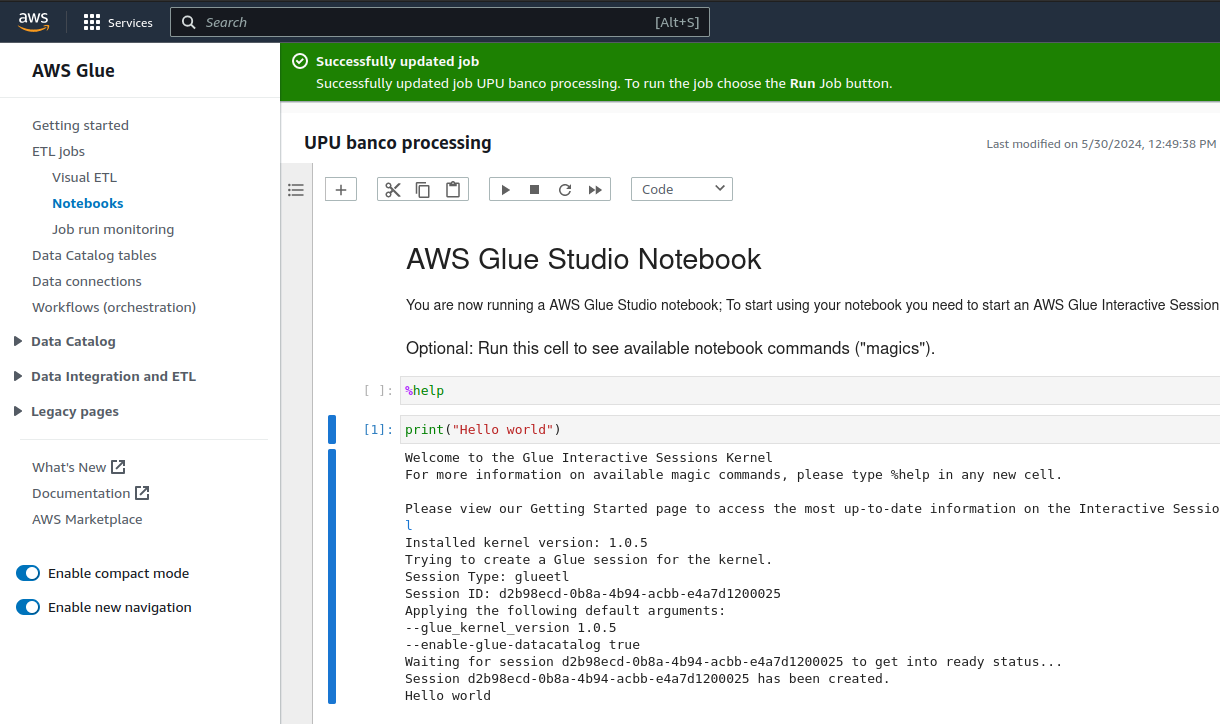

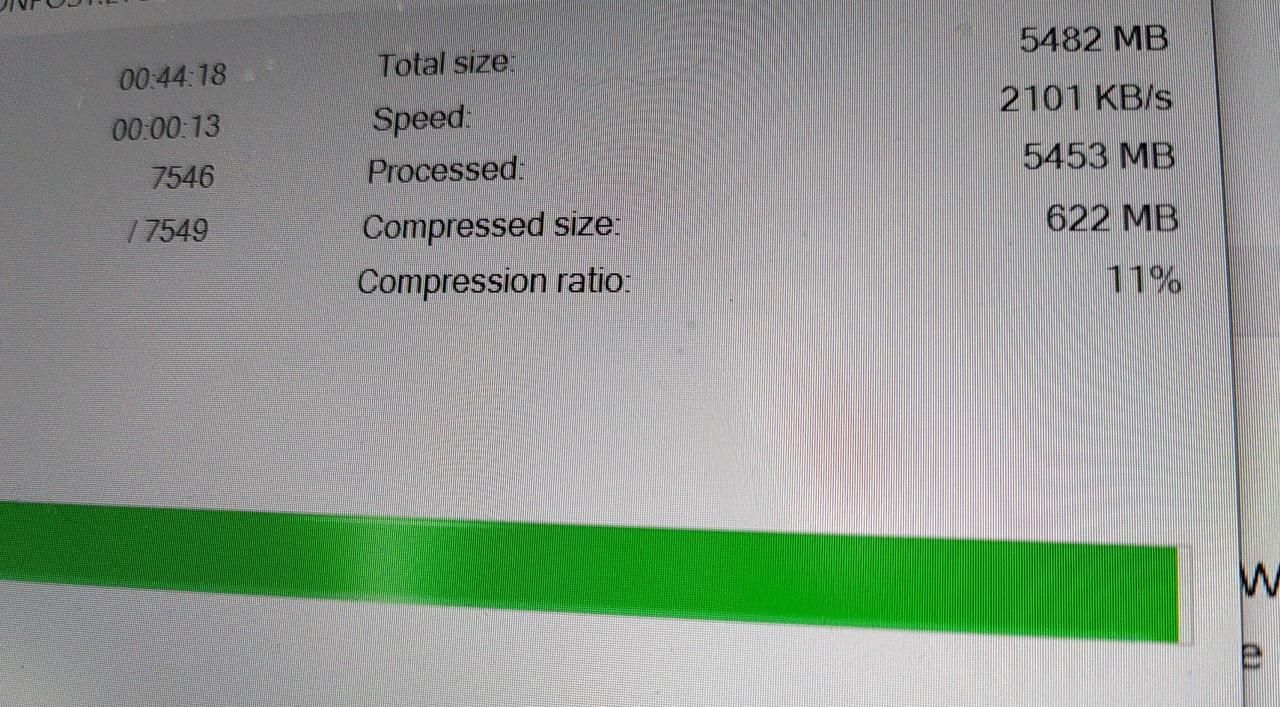

As the afternoon progresses, we are challenged by poor data availability about our chosen geography, and while we wait for the various transfers to complete, we quickly evaluate the development options. While a couple of us are happy to prototype in QGIS and Jupyter, a web based prototype is sorely needed. Setting up a shared data science environment should have been the first thing we did. And the AWS team did have everything ready for us, with more or less one-click access to notebooks and virtual servers. Nevertheless, we still needed hand-holding to find our way around the consoles, things did not immediately work the way we expected, and after an hour muddling about with IAM roles we still were not able to collaborate effectively within the sandbox. So we used a local data science environment, limited of course to our laptops' computing power, synchronizing our work through GitHub. Later on we went back to AWS for the presentation, and learning about their products like AWS Glue and Partyrock helped to guide our higher-level thinking.

A source of frustration to me is that little of the data we could work with was open. This is a topic that we must continue to advocate on across the open data community, as the trend to restrict data sources seems to be global. The Brazilian post has PHP/HTML databases which are hard to scrape, and almost no proper data tables online. We relied on UPU aggregates almost a decade out of date, as well as their more current statistics portal, none of which is openly licensed. It's a similar situation in Switzerland at the moment.

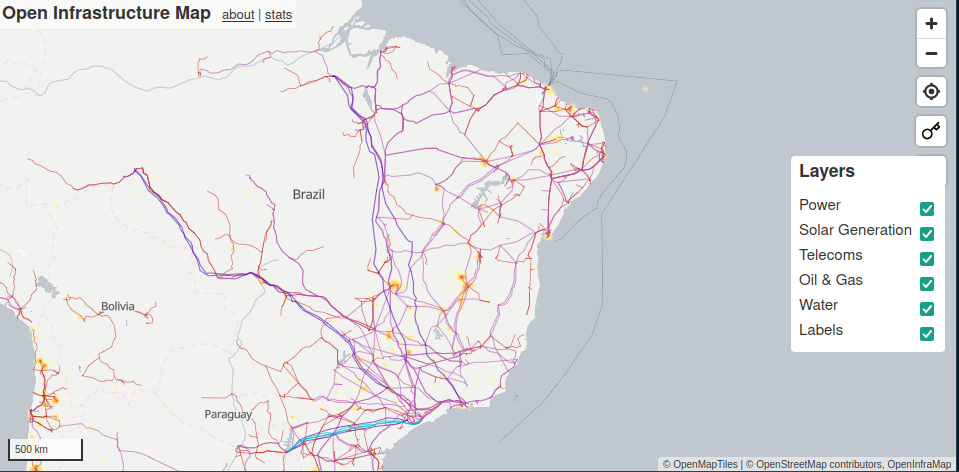

For this reason I would say that the main useful source of data in our project is OpenStreetMap. One quick hack I worked on is an adaptation of the excellent Open Infrastructure Map, to which adding the post offices seems straightforward. The continued importance and huge impact of OSM around the world was again underscored by our work. We invited everyone to join Swiss PGDay at OST next month, an event organised by closely linked communities with a technical focus. We also followed a QGIS webinar happening the same day, and talked about supporting OSM through Mapathons with postal workers.
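
For readers who want to try something similar, here is a minimal sketch of how post office locations could be pulled from OpenStreetMap via the Overpass API. This is not the code from our project: the function names are hypothetical, the sample response is made up for illustration, and the query assumes that offices are tagged `amenity=post_office` and that the country area can be found by its ISO 3166-1 code. No network request is made here; the query text would be sent to a public Overpass endpoint of your choice.

```python
def build_post_office_query(iso_country_code: str, timeout_s: int = 60) -> str:
    """Build an Overpass QL query for all post offices in one country.

    Hypothetical helper: assumes the country is mapped as an area tagged
    with its ISO 3166-1 alpha-2 code (e.g. "BR" or "CH").
    """
    return (
        f"[out:json][timeout:{timeout_s}];\n"
        f'area["ISO3166-1"="{iso_country_code}"][admin_level=2]->.country;\n'
        'nwr["amenity"="post_office"](area.country);\n'
        "out center;"
    )


def parse_post_offices(payload: dict) -> list[dict]:
    """Flatten an Overpass JSON response into one row per post office."""
    rows = []
    for element in payload.get("elements", []):
        # Nodes carry lat/lon directly; with "out center", ways and
        # relations carry their coordinates in a nested "center" object.
        point = element if "lat" in element else element.get("center", {})
        if "lat" not in point or "lon" not in point:
            continue  # skip anything without usable coordinates
        rows.append(
            {
                "osm_id": f'{element["type"]}/{element["id"]}',
                "name": element.get("tags", {}).get("name"),
                "lat": point["lat"],
                "lon": point["lon"],
            }
        )
    return rows


# Made-up sample in the shape of an Overpass JSON response.
sample_response = {
    "elements": [
        {"type": "node", "id": 1, "lat": -15.79, "lon": -47.88,
         "tags": {"amenity": "post_office", "name": "Agência Central"}},
        {"type": "way", "id": 2, "center": {"lat": 46.95, "lon": 7.44},
         "tags": {"amenity": "post_office"}},
    ]
}

query = build_post_office_query("BR")
offices = parse_post_offices(sample_response)
```

The flat rows can then be loaded into a spreadsheet, QGIS or a data frame for a first look at coverage.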

Once we finally had the data, and the metadata (which was a whole other story), we spent a few hours trying to put it together. Typically this is a quick visual exploration in a spreadsheet or QGIS, followed by Python code to set up an ETL workflow with Pandas. I fired up Apache Airflow, but did not make much use of it this time. Our challenge owner was keen to use something like R Shiny, and so we picked a relatively recent platform called Panel for our data exploration.
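
To give an idea of what such a workflow looks like, here is a small sketch of an extract, transform and load sequence in Pandas. The column names, the sample figures and the "offices per 100,000 inhabitants" indicator are all assumptions made up for illustration; they are not our actual data or method.

```python
import io

import pandas as pd

# Made-up inputs standing in for two separately sourced tables.
OFFICES_CSV = """region,post_offices
North,120
South,300
"""
POPULATION_CSV = """region,population
north ,1800000
South,2500000
"""


def extract(csv_text: str) -> pd.DataFrame:
    """Read one raw table; in practice this would be a file or URL."""
    return pd.read_csv(io.StringIO(csv_text))


def clean_region(frame: pd.DataFrame) -> pd.DataFrame:
    """Normalise the join key so 'north ' and 'North' match."""
    return frame.assign(region=frame["region"].str.strip().str.title())


def transform(offices: pd.DataFrame, population: pd.DataFrame) -> pd.DataFrame:
    """Join both tables and derive a simple per-capita indicator."""
    merged = clean_region(offices).merge(
        clean_region(population),
        on="region",
        how="inner",
        validate="one_to_one",  # fail loudly on duplicate regions
    )
    merged["offices_per_100k"] = (
        merged["post_offices"] / merged["population"] * 100_000
    )
    return merged.sort_values("offices_per_100k").reset_index(drop=True)


def load(frame: pd.DataFrame) -> str:
    """Serialise the result; a real pipeline would write to storage."""
    return frame.to_csv(index=False)


result = transform(extract(OFFICES_CSV), extract(POPULATION_CSV))
output_csv = load(result)
```

Keeping the three steps as separate functions makes it easy to move them into a scheduler such as Airflow later, should the workflow outgrow a notebook.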

This tool allowed us to work within Python data science notebooks in Jupyter, and then almost magically transform them into responsive dashboards. In hindsight we should have taken more note of how unstable the tool is, as we lost some time dealing with bugs. But in the end it was fun to work with an ambitious new open source project with very responsive maintainers. We used their Glacial data example based on Bokeh as a bootstrap, and found everything mostly well documented. Just don't get confused by the differences in the style and structure of the various widget frameworks.

One of our team members had to leave for Norway, but was replaced by another coming from Geneva the next day. We started feeling a little bit overwhelmed on Friday morning, partly because we shared a table with another team whose frustrations had a bit of a spillover effect on us. But it was also because our newfound relationships were at the highest tension point during the event: there was still a day of work ahead of us, and each person had somewhat contrasting ideas of how to spend it. Without the upbeat mood and leadership qualities of the most senior member of our team (not me, this time!), we might have easily fallen apart.

Lunch was again quick, light yet nutritious, and I got to know my new colleague from the ITU much better over helpings of couscous and rice pilaf. Our relaxed conversations eased the tensions and we got into the final sprint with renewed camaraderie and focus. Dribdat was up on all the screens, helping us to keep pace, as we worked on a presentation deck with Stable Diffusion imagery, crunched final data analysis in Geopandas, coded refinements to our Panel dashboards, ran an AWS Glue deployment of a barebones Ubuntu server, and trained an AI assistant with Hugging Face to read through UPU legal texts ... all at the same time!

In the final minutes we had no idea whether the project would come together, and it is exactly that thrill of hanging together on a virtual cliff side that keeps me coming back to Hackathons. We were right on time though, everything uploaded and working (just don't sneeze!..) as we made our way downstairs to the great conference hall where the event started, ready to listen and learn from all the other teams, and deliver our own hacker package as the last team on the roster.

Our pitch went well despite zero practice, with huge thanks to everyone for a very professional preparation. The one hiccup was at the very end of our demo. We ran into a glitch that prevented our chat bot from working on stage. However, we shared a QR code and link, where participants could try the demo on their own smartphones or laptops.

(Thanks to all the teams presenting for their compelling stories and results! I'll update this with a link once an official recap is available.)

The afterparty in Wankdorf brought everything into focus with a nice closure apéro. The hackathons, the politics, the great task of digitalising and governing the communications of the world. It is the Swiss Post's 175th birth-year, the UPU's 150th, and we kept speaking, debating, and promising to continue to support each other late into the evening.

With this I'd like to extend my gratitude to my team mates for walking alongside me on this meandering path and sticking through to tell the tale. I hope that you will join me on a real hike through the Alps or the Andes soon, and that our efforts will help you in your further journeys. I know that at least one of us plans to present the project again soon, at a conference in Africa.

Let's take a moment to think about these people keeping the most remote village as well as our whole planet connected: the Weltpostverein, masterminds of standardized worldwide delivery! When everything is open source, documented, repeatable, testable, and there to be built on further – that is when we can be resilient as a connected planet. Can our dashboards help keep improving the reach and public value of the postal service? We cannot influence the decision to hire or fire, but our data can contribute to a calm and considerate logic. It is our hackathon passion that, hopefully, contributes to an ethical and humane application of such tools.

In spite of all the abovementioned qualms of public running, this Friday I completed my first run, a nighttime "experience" through Biel / Bienne with my running buddy Ernie. If anyone needs our speedy legs to run a package across town, just put a stamp on it 😅

The works on this blog are licensed under a Creative Commons Attribution 4.0 International License
