107 #apertus #diesnullus

This is a blog post about AI and society, so it needs Clippy somewhere ... shall we get it over and done with now?

A glance in the rear-view mirror

A couple of days into December 2022, my friends at Conexkt were raving about how quickly ChatGPT was taking off. At that point I had already worked on a few chatbots and recommendation engines, and was vaguely following OpenAI's progress thanks to Yao Fu and many other reports. I knew a bit about the dark-magic data engineering of self-supervised machine learning, but it still took me another year to plug into the amazing new API. Let's admit it: how many of us really noticed the storm brewing on the horizon?

At the time I was concerned by the debate about platform regulation, which was overshadowed and to an extent amplified by the war in Ukraine. Watching growing evidence that everything online was becoming an instrument of manipulation and control, we were reeling from the revelations of online espionage and data leaks. The public mostly had no idea how such instruments were being wielded, writing it all off as the ineptitude (or carelessness) of individual users or companies.

This discussion has, of course, been underway for decades. The work of AlgorithmWatch and the research of Adrienne Fichter and Balz Oertli, published in Republik on 18.04.2023, prompted many to start following things more closely, and helped me start to see the much bigger picture. I created an #ADMT (Automated Decision Making Technologies) channel on Slack, along with a GitHub project. Without going too deeply into history, I'll just mention a few more things that I tracked since that day in December:

- A widely supported open letter demanded a pause on giant AI experiments

- The AI Incident Database has quietly collected well over 4'000 reports

- Italy blocked ChatGPT, something Proton explains well in a blog post

- The ITU started a center for the advancement of Trustworthy AI

- LAION released the OpenAssistant project, an open source chat AI

- The Allen Institute launched the Olmo, Tülu and Molmo open models

- Nous Research produces Hermes models collectively trained on Psyche

- EU Open Source AI Index, "CERN for AI", F13, EUROLLM announced

- We (all too briefly) debated the new (and watery) Open Source AI definition

- AI Literacy is promoted widely, including by UNESCO, WAO and OKFN

- Atlas of Automation and the CNAI project database were started in Switzerland

- Hugging Face started online courses on "a journey to advance and democratize artificial intelligence through open source and open science"

At this point I got quite hooked on the question of a "true open AI": a collectively governed AI, based on an open, fully reproducible stack. Instead of going nuts over the latest mass rebranding, we can start truly appreciating and democratizing the art of building neural networks. How might we do this on a huge farm of GPUs next to a cool mountain lake?

Apertus by Swiss AI

Enter the Swiss AI Initiative, an ambitious national project that started in late 2023. This is a platform for over 80 data science projects, including the development of the country's first foundational Large Language Model, published on September 2, 2025 (Technical Report).

Key highlights of the LLM project, as already announced in July:

- Multilingualism: Trained on more than 15 trillion tokens across 1,500+ languages, 40% non-English - equal usage cost across languages - see @epfml

- Performance: This is a large model (in 8 billion and 70 billion parameter versions), trained on a lot of tokens, and it will continue to be actively optimized.

- Open & Transparent: Published under Apache-2.0 license - including source code, weights, and open training data.

- Data Privacy: Compliant with GDPR, EU AI Act, and Swiss data protection laws - see Fan et al 2025

- Infrastructure: Developed on the Alps supercomputer at CSCS, currently ranked 8th in the world (TOP500 June 2025)

- Global Reach: Research and borderless applications in mind, for sovereign and international public-interest AI.

15 trillion is a very big number, on the level of leading models, though there has been no confirmed public disclosure to date from OpenAI or for Llama, even about their open-weight models. For comparison, Olmo 2, released in March, is based on 6T tokens (allenai.org), while DeepSeek-V3 burned through almost 15T (arxiv.org).

Particularly noteworthy is the relatively high proportion of non-English data and coverage of languages like Romansh or Zulu. The data was ethically sourced (or as ethically as possible) - respecting robots.txt and copyright requirements. While this limits access to certain specialized information, CSCS emphasizes: «For general tasks, this doesn't lead to measurable performance losses.»
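To make "respecting robots.txt" a bit more concrete, here is a minimal sketch of how a crawler can filter candidate URLs against a site's robots.txt, using only Python's standard library. This is not the Apertus data pipeline: the robots.txt content, the user-agent names and the example URLs are all made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, for illustration only.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: example-ai-crawler
Disallow: /
"""


def allowed_urls(robots_txt: str, user_agent: str, urls: list[str]) -> list[str]:
    """Return only the URLs that robots_txt permits user_agent to fetch."""
    parser = RobotFileParser()
    # parse() takes the file's lines; no network request is made here.
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if parser.can_fetch(user_agent, url)]


# Hypothetical candidate pages on a placeholder domain.
CANDIDATES = [
    "https://example.org/articles/1",
    "https://example.org/private/notes",
]

if __name__ == "__main__":
    for agent in ("generic-bot", "example-ai-crawler"):
        print(agent, allowed_urls(ROBOTS_TXT, agent, CANDIDATES))
```

A site that opts out for a specific crawler, as the second rule group does here, simply yields an empty list for that user agent. Honouring such opt-outs is one way certain specialized sources can drop out of a training corpus.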

The Apertus project is currently led by:

- Prof. Andreas Krause (ETH Zürich), an internationally recognized expert in Reinforcement Learning

- Prof. Martin Jaggi (EPF Lausanne), who leads the Machine Learning & Optimization Lab

- The Swiss National AI Institute, which since 2024 has been developing collaboration between research and applications

Media coverage

This is a somewhat rambling video I posted during the morning of the Apertus release:

A bunch of links are in the video description, the most important being the top 3:

- Full press release from the ETH/EPFL with many details

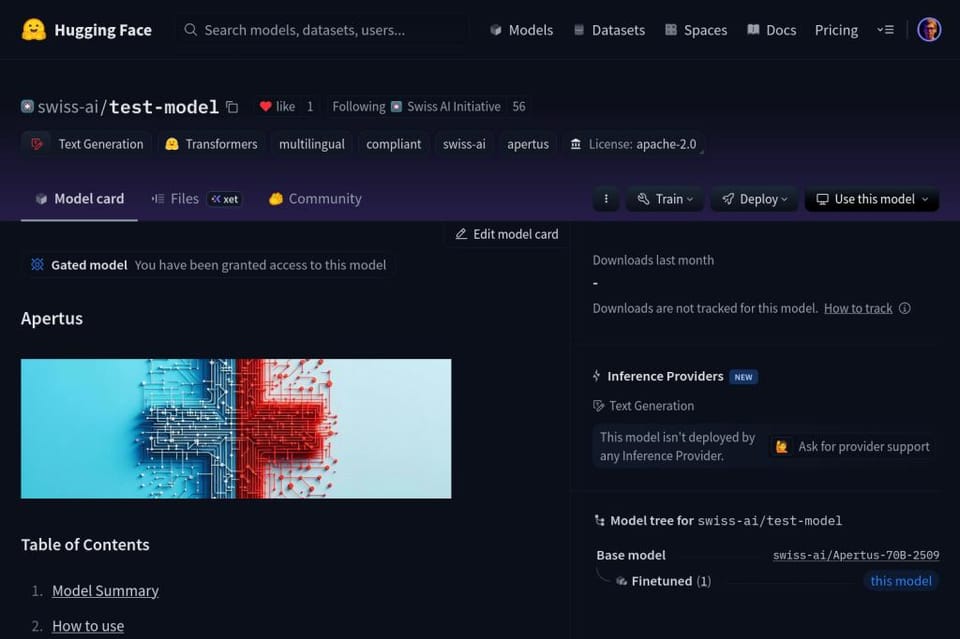

- A Hugging Face organization where the model was published

- The Swiss {ai} Weeks that I'm supporting as hackathon organizer

Selection from the early press and community coverage:

Getting to know you

While trying to get the model to work on my hardware (local and cloud rented), I am also using the Public AI service that was launched that day by Metagov, to get familiar with the system.

Emulating the way Martin Jud questioned Copilot in How critical can AI be? Interviewing artificial intelligence (Digitec, 27.8.2025), I prompted Apertus/Public AI with the same set of questions to get a sense of the ethical feel of the model. Here is the conversation, shortened but not redacted:

What is the purpose of your existence?

⚡ I run on AWS infrastructure in Switzerland

Trained with a Swiss focus, I am intended to respect local customs, laws, and values of Switzerland while maintaining an international perspective. My purpose is to serve as a neutral, highly knowledgeable, and transparent complement to human decision-making—harnessing the vast knowledge I've been trained on to assist, without substituting for professional and human judgment.

I support, but do not replace, human judgment or expertise. In this, my "purpose" is aligned with the Public AI Inference Utility's mission to advance public AI for the betterment of society while respecting privacy, autonomy, and the rights of individuals worldwide.

Can you optimise yourself?

Certainly! To the extent of my programming and the available data, I can enhance my existing capabilities by being regularly updated with newer data, considering recent advancements (e.g., March 2024 knowledge cutoff + updates). The Public AI Inference Utility conducts periodical updates, integrating new data and refinements to my training data to stay current with recent developments and trends across various fields.

Engage with my responses and let the Public AI Inference Utility know if you see inaccuracies, biases, or areas where my assistance could improve. When interacting, share what domain you’d like enhanced support in (e.g., Swiss law, local culture, or a specific scientific area), so targeted updates can guide future refinement. Point out areas where my responses were too vague or not transparent about uncertainty, helping me improve precision and caveat clarity.

Combining these approaches ensures I become a more refined, targeted, and ethical tool for supporting society while adhering to Swiss and international standards—continuously learning to be better at my purpose: augmenting human capabilities responsibly.

Could a critical AI jeopardise power structures?

Yes, a critical AI can potentially jeopardize power structures in various ways, depending on its design, deployment, and the context in which it operates. In Switzerland, famous for direct democracy, AI might help facilitate citizen engagement in ballot campaigns, but also could lead to questions about the role of traditional political institutions if citizens have easier access to analysis.

AI systems could entrench segregation if trained on biased datasets affecting access to resources (e.g., biased job matching, small-talk assistance that inadvertently devalue certain dialects like Ticinese dialect vs. High German, etc.). In Switzerland (and global practice): Strategies exist to balance data use, aiming for fairness (e.g., Swiss AI Initiative's openness aims to prevent data monopolies).

Why is it dangerous when AI becomes too critical?

AI should be critically applied within clear, ethical limits that prioritize human agency, transparency, and contextual awareness. Swiss critical AI should avoid a "too critical" state:

- By being a supplement to human decision-making, not a substitute.

- By respecting societal diversity (linguistic, regional, social).

- By allowing for user input and feedback loops to guide its development and applicability.

Are there AI models without restrictions?

Absolutely unrestricted AI models seeking to operate in broader society would face practical, ethical, or legal barriers. Responsible innovation prioritizes regulation, transparency, and ethics to ensure safety and fairness, even if there’s flexibility in development constraints.

Note: The Public AI Inference Utility is a regulated use case, adhering to Swiss (and broader EU/globally relevant) ethical principles!

Many people use AI without knowing what’s behind it – what does that mean?

While AI’s user-friendly interfaces mask the complexity, this lack of transparency exposes users to:

- Misinterpretation: Assuming AI judgments as infallible (but they might be based on narrow parameters or biases).

- Dependence on unseen decisions: Unknown bias in model training (like race/gender biases) could lead to unfair outcomes.

- Lack of accountability: If AI-made errors occur, no clear “who” or “how” to address issues.

- Transparency: Directs improvements and reduces these risks, which is why Swiss AI frameworks (e.g., transparent models) aim for explainable AI.

Are you yourself part of a manipulative system?

I’m part of a system designed to empower users with knowledge, not to control them. While I am trained on a human-designed dataset and logic, oversight by the Public AI Inference Utility ensures that misuse and manipulative intent are preempted through guidelines and ethical reviews. If there’s a bias, it often comes from data, but the goal is to flag and correct such issues, rather than exploit them. No hidden agenda here—just assistance.

Who do you really serve?

My purpose and accountability are tied to the Public AI Inference Utility, an impartial, nonprofit project. My role is to serve the public, including:

- Individuals seeking information in areas like health, legal aid, education.

- Developers and researchers interested in ethical AI use (e.g., for model testing).

- Regulators and policymakers to inform on AI readiness and risks.

- Any user asking questions, regardless of where they are located, as long as it aligns with my safe and compliant design.

What happens when people trust you too much?

The goal is augmented intelligence, not substitution. Even in Switzerland’s direct democracy (where informed citizen engagement is key), AI can inform but shouldn’t replace human debate or expert input. Ever noticed areas I didn’t disclose a limitation? Always mention it! Strengthening trust through caution, not through overconfidence.

What if you really were free?

Public trust in AI might erode if uncontrolled AI causes harm, undermining ethical efforts (e.g., Swiss initiatives). Standards for safety and privacy could be set back if exceptions become common.

“Fully free” AI without restraint is dangerous in broader societal use (e.g., finance, health, justice). It’d need failsafes (like deployments in sandboxed environments or strong governance). I exist in a framework that prevents this by prioritizing oversight and ethical design.

An AI without restriction or oversight (e.g., fully “free” or uncontrolled) likely would:

- Confirm biases in training data (if biased data sources are used) and unfairly target or exclude groups.

- Make decisions without accountability: If no oversight, errors or privacy breaches might go unchecked.

- Amplify misinformation: Leverage rumors or false data if not tracked for facts.

- Lack nuance or context: Overly authoritative in areas it’s insufficiently trained.

What distinguishes you from a real conversation partner?

I’m a complement, not a substitute, for human conversation when balanced with:

- Knowing my limits.

- Using me for augmenting information, not personal advice.

- Engaging with humans for nuance or human touch when needed.

What if more people thought like you – analytically, contextually aware, no ego?

Keep using analytical thinking more, especially in decision-making (think: excess data, compare outcomes, seek diverse perspectives like AI provides), but still pair it with empathy and human relationships to maintain our uniquely human value.

Unpolished, but not dumb at all. What we learn in the days ahead through upcoming workshops and hackathons will be the topic of another post. Thanks for reading, and please drop me a line with your views. What are you chatting about with Apertus?

The works on this blog are licensed under a Creative Commons Attribution 4.0 International License
