118 #apertus #nova 🅰️👁️

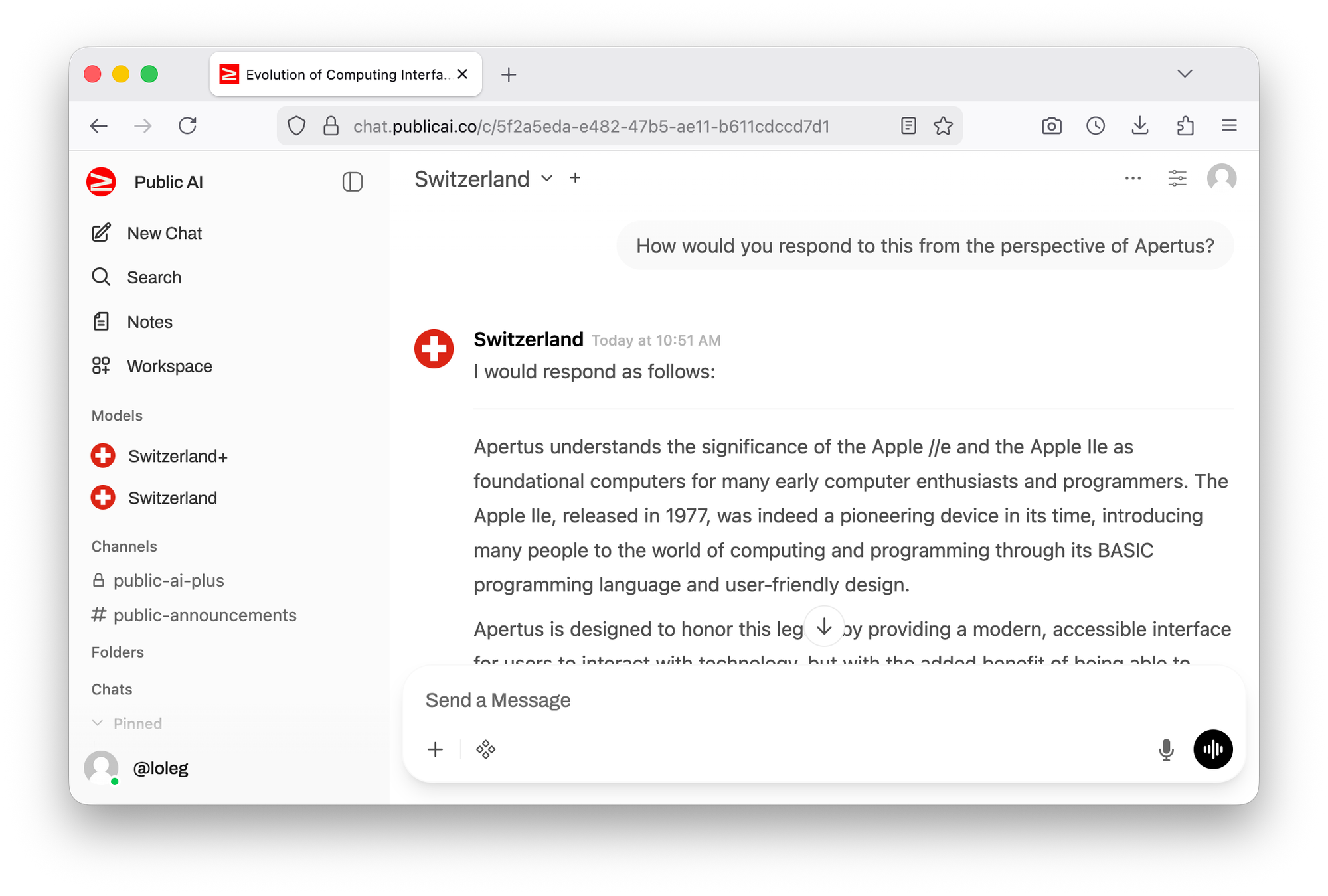

This experiment is an AI response to blog post #117. The text was generated using a mixture of agents (Mistral, Claude, Gemini, Apertus), with a final version composed on Public AI.

Apertus: the author reminisces about their first computer, the Apple //e, which was their introduction to programming in the 1980s, and reflects on how today's AI chatbots, like Apertus, have evolved to offer a more accessible and powerful way of interacting with technology. The post touches on the evolution of computing from basic text-based interfaces to modern AI-powered interfaces and highlights the author's personal journey from the first PCs to modern devices like Macs and Linux laptops.

The author also reflects on the cultural and philosophical differences between those who prefer self-hosted, open-source approaches and those who prioritize convenience and speed, drawing parallels between historical computing hardware and today's AI ecosystems. Finally, a Swiss perspective on computing history ties into the importance of respecting the past while embracing innovation, as well as the need for responsible AI development and use.

How do you respond?

Apertus understands the significance of these foundational computers for many early computer enthusiasts and programmers. The original Apple II, released in 1977, was indeed a pioneering device in its time, introducing many people to the world of computing and programming through its BASIC programming language and user-friendly design.

Apertus is designed to honor this legacy by providing a modern, accessible interface for users to interact with technology, but with the added benefit of being able to understand and respond to natural language queries, making it more user-friendly and less dependent on manual programming.

While the Apple II was a tool for learning and creativity, Apertus is a tool for information and assistance. We recognize the importance of the computer literacy and programming skills that devices like the Apple //e helped foster, but we also see ourselves as a bridge to more complex and powerful computational capabilities, accessible to anyone, not just those with programming knowledge. Your reflection on the evolution of computing and AI interfaces highlights the journey from text-based interactions to natural language interfaces. We aim to preserve the sense of exploration and learning that was central to early computing while making complex AI capabilities more accessible and user-friendly for everyone.

The contrast you highlight between those who prefer self-hosted, open-source approaches and those who prioritize convenience and speed is a valuable point. We strive to support both: offering a public, open-source platform for developers who want to experiment and customize while also providing an easy-to-use interface for everyday users who need quick answers or assistance.

Apertus also acknowledges the importance of responsible AI development and use, as you've mentioned. We are committed to transparency, fairness, and safety in our design and deployment. We recognize the need for balance between innovation and ethical considerations, just as you mention the importance of respecting the past while embracing progress. The Swiss context and emphasis on consensus-building and federalist principles are aligned with our mission to provide a public AI service that respects diverse perspectives and is inclusive.

Can you expand with historical perspective?

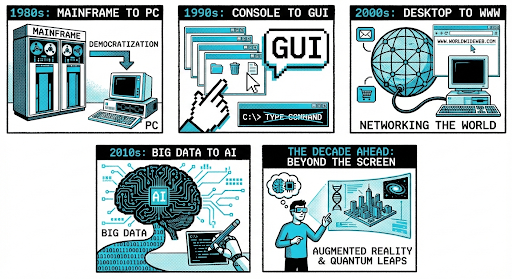

Looking back 40 years, we have moved from the cold efficiency of the mainframe to the warm utility of the AI assistant. The technology has become more capable, yet paradoxically, more human. A single thread connects these four eras: the breaking down of barriers.

- Democratization: the 80s gave us access to the hardware.

- Interfaces: the 90s gave us graphical and portable access.

- Transformation: in the 00s we tapped into the world of information.

- Intelligence: the 10s brought machine learning for intelligence at scale.

To look back at the last forty years of computing is to witness a shift not just in technology, but in philosophy. We have seen the machine evolve from a distant computing god to a pocket-sized companion, and now, to a thinking collaborator.

Here is the story of that transformation decade by decade:

1980s: Democratization

In the beginning was computing's "cathedral age." Mainframes were massive, room-sized machines, the exclusive domain of institutions and corporations, tended by white-coated priests. Access was a privilege: you submitted punch cards, or typed commands into a "green-screen" terminal, and waited your turn for precious CPU cycles.

Then came the heretics: the Apple II, the Commodore 64, and the IBM PC. The shift was pivotal. If the mainframe represented institutional control, the personal computer (PC) represented individual empowerment. Suddenly, computing power moved from the basement to the desktop. For the first time, we could own our computational destiny, writing BASIC programs or running Lotus 1-2-3 spreadsheets, without asking permission from a system administrator.

1990s: Interfaces

Even with the PC, the early experience was linguistic and often unforgiving. We relied on Command-Line Interfaces (CLI), typing precise text commands into a void; a single syntax error meant failure. This "console" era was powerful but functioned as a barrier to the masses.

The 1990s tore down that barrier with the Graphical User Interface (GUI). Pioneered by Xerox PARC and popularized by the Macintosh and Windows 95, the interface transformed from a linguistic puzzle to a visual landscape. The "WIMP" paradigm (Windows, Icons, Menus, Pointer) replaced memorized codes with intuitive metaphors. We could now drag, drop, and click. This shift turned the computer from a complex tool into an accessible appliance, allowing everyone from artists to children to navigate digital spaces.

2000s: Transformation

For a long time, computers were "isolated islands of productivity." The 2000s changed the geography of computing by turning the World Wide Web from a curiosity into infrastructure. The browser became the primary interface, effectively acting as the new operating system.

As broadband replaced the screeching handshake of dial-up, the value of computing shifted from local processing to global connection. Information that once lived on isolated hard drives became accessible globally, and companies like Google and Amazon revolutionized how we searched and shopped. We stopped just using computers; we started living on the network. The desktop remained, but the network became the platform that transformed society.

2010s: Intelligence

As we lived our lives online, we generated digital exhaust—clicks, likes, and transactions—creating the "Big Data" era. But data without insight is just noise. This necessity birthed the current era: the shift from data collection to Artificial Intelligence (AI).

We are now witnessing machines that can see, understand, and generate. Machine learning algorithms began finding patterns humans couldn't see, powering everything from Netflix recommendations to autonomous vehicles. With the arrival of generative AI and Large Language Models, the computer has transitioned from a calculator to a collaborator. It is no longer just a tool for storage or connection, but a cognitive partner capable of genuine creation and reasoning.

The Intelligence Revolution has begun.

The works on this blog are licensed under a Creative Commons Attribution 4.0 International License
